Introduction

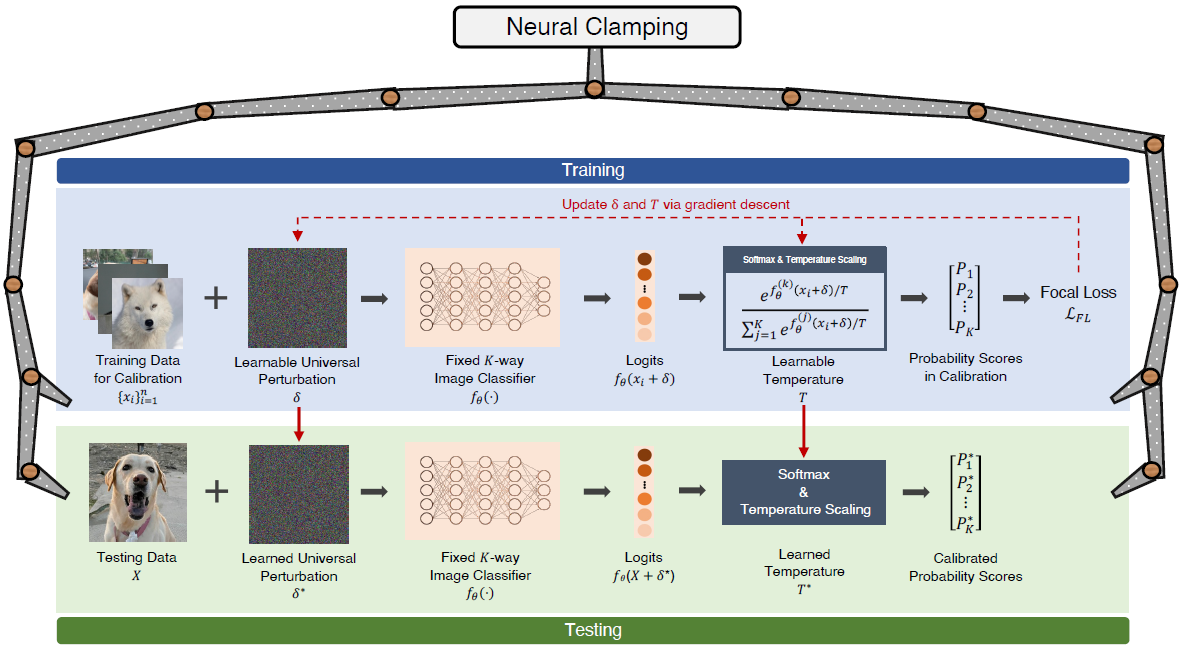

Neural network calibration is an essential task in deep learning: it ensures that a model's prediction confidence is consistent with the true likelihood that the prediction is correct. In this demonstration, we first visualize the idea of neural network calibration on a binary classifier and show the model features that reflect its calibration. Second, we introduce our proposed framework, Neural Clamping, which applies a simple joint input-output transformation to a pre-trained classifier. We also provide other calibration approaches (e.g., temperature scaling) for comparison with Neural Clamping.

What is Calibration?

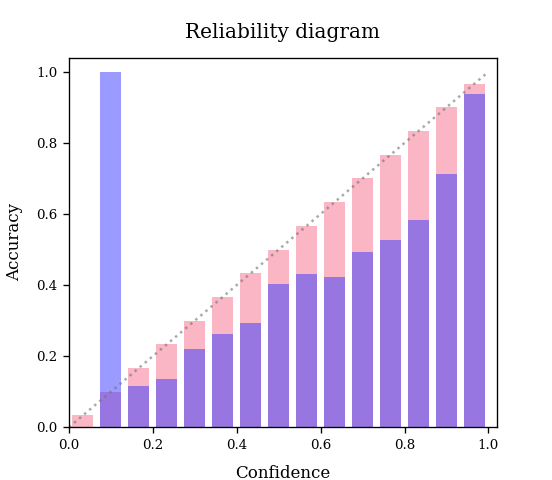

Neural network calibration seeks to align a model's prediction confidence with its true correctness likelihood. A well-calibrated model should provide accurate predictions and reliable confidence when making inferences. In contrast, a poorly calibrated model shows a wide gap between its accuracy and its average confidence. This gap can hamper applications that require accurate uncertainty estimation, such as safety-related tasks (e.g., autonomous driving systems and medical diagnosis).
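The gap between accuracy and average confidence can be made concrete with a few lines of Python. This is a minimal sketch on made-up toy predictions (the values and the helper names `accuracy` and `average_confidence` are illustrative assumptions, not part of the toolkit):

```python
# Toy predictions: (confidence of the predicted class, was the prediction correct?).
# These values are invented for illustration only.
predictions = [
    (0.95, True), (0.90, False), (0.85, True), (0.99, False),
    (0.80, True), (0.92, False), (0.88, True), (0.97, True),
]


def accuracy(preds):
    """Fraction of predictions that were correct."""
    return sum(1 for _, is_correct in preds if is_correct) / len(preds)


def average_confidence(preds):
    """Mean confidence the model assigned to its predicted classes."""
    return sum(conf for conf, _ in preds) / len(preds)


acc = accuracy(predictions)
avg_conf = average_confidence(predictions)
gap = avg_conf - acc  # positive gap: the model is overconfident

print(f"accuracy={acc:.4f}  average confidence={avg_conf:.4f}  gap={gap:.4f}")
```

A positive gap indicates overconfidence and a negative gap indicates underconfidence; a well-calibrated model keeps the gap close to zero.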

Calibration Metrics

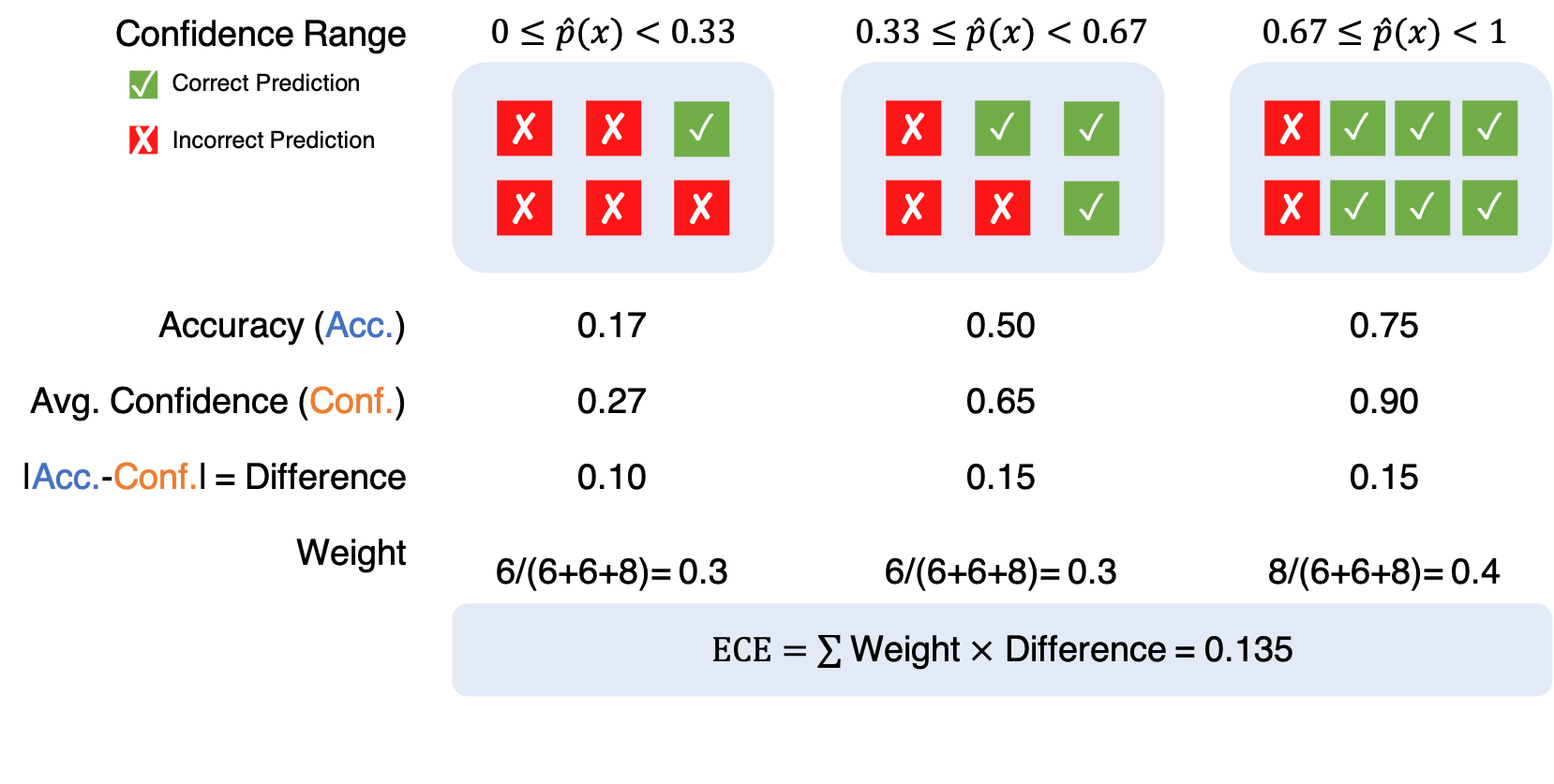

To measure a model's calibration error objectively, researchers use calibration metrics such as Expected Calibration Error (ECE), Static Calibration Error (SCE), and Adaptive Calibration Error (ACE).
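As an illustration of one of these metrics, the sketch below computes ECE with equal-width confidence bins: each bin contributes the absolute difference between its accuracy and its mean confidence, weighted by the fraction of samples it contains. The function name, the binning convention (half-open bins, with a confidence of exactly 1.0 placed in the last bin), and the toy data are assumptions made for this example; it is not the toolkit's implementation.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE with equal-width bins over [0, 1].

    confidences: confidence of the predicted class for each sample.
    correct:     1 if the prediction was right, 0 otherwise.
    """
    n = len(confidences)
    conf_sum = [0.0] * n_bins   # sum of confidences per bin
    hit_sum = [0] * n_bins      # number of correct predictions per bin
    count = [0] * n_bins        # number of samples per bin

    for conf, hit in zip(confidences, correct):
        # Map the confidence to a bin index; clamp so conf == 1.0 stays in range.
        idx = min(int(conf * n_bins), n_bins - 1)
        conf_sum[idx] += conf
        hit_sum[idx] += hit
        count[idx] += 1

    ece = 0.0
    for b in range(n_bins):
        if count[b] == 0:
            continue  # empty bins contribute nothing
        bin_acc = hit_sum[b] / count[b]
        bin_conf = conf_sum[b] / count[b]
        ece += (count[b] / n) * abs(bin_acc - bin_conf)
    return ece


# Invented toy data: four very confident predictions (half of them wrong)
# and two low-confidence predictions (half of them wrong).
toy_conf = [0.95, 0.95, 0.95, 0.95, 0.55, 0.55]
toy_correct = [1, 1, 0, 0, 1, 0]
ece = expected_calibration_error(toy_conf, toy_correct, n_bins=10)
print(f"ECE = {ece:.4f}")
```

In this toy data the high-confidence bin dominates the score, because it holds most of the samples and has the largest accuracy-confidence gap.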

Proposed Approach: Neural Clamping
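As described in the introduction, Neural Clamping applies a joint input-output transformation to a pre-trained classifier; per the title of the paper cited below, the output side is temperature scaling. The sketch below illustrates only that output-side idea on hand-picked logits. It is a minimal illustration under stated assumptions (the `softmax` helper and the logit values are hypothetical), not the Neural Clamping implementation, and the learned input perturbation is not shown.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (assumed > 0)."""
    scaled = [z / temperature for z in logits]
    shift = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for a 3-class problem.
logits = [4.0, 1.0, 0.5]

for temperature in (1.0, 2.0, 4.0):
    probs = softmax(logits, temperature)
    predicted = probs.index(max(probs))
    print(f"T={temperature}: predicted class={predicted}, confidence={max(probs):.3f}")
```

Dividing the logits by a temperature greater than 1 lowers the confidence without changing the predicted class, which is why temperature scaling can reduce overconfidence while leaving accuracy untouched.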

Demonstration

In current research, a reliability diagram is typically drawn to show a model's calibration performance. However, since reliability diagrams are usually static bar charts, the insight they offer is limited. In this demonstration, we show how to make reliability diagrams interactive and insightful to help researchers and developers gain more from the graph. Specifically, we provide three CIFAR-100 classification models in this demonstration. Multiple bin numbers are also supported.

We hope this tool could also facilitate the development process.

Use NCToolkit to Calibrate Your Own Models

Quick start by running the following code!

With this tool, users can calibrate their own models using our package, \(\texttt{NCToolkit}\).

```python
# !pip install -q git+https://github.com/yungchentang/NCToolkit.git
from neural_clamping.nc_wrapper import NCWrapper
from neural_clamping.utils import load_model, load_dataset, model_classes, plot_reliability_diagram

# Load model
model = load_model(name='ARCHITECTURE', data='DATASET', checkpoint_path='CHECKPOINT_PATH')
num_classes = model_classes(data='DATASET')

# Dataset loader
valloader = load_dataset(data='DATASET', split='val', batch_size="BATCH_SIZE")
testloader = load_dataset(data='DATASET', split='test', batch_size="BATCH_SIZE")

# Build Neural Clamping framework
nc = NCWrapper(model=model, num_classes=num_classes, ...)

# Calibrated using Neural Clamping
nc.train_NC(val_loader=valloader, epoch='EPOCH', ...)

# General Evaluation
nc.test_with_NC(test_loader=testloader)

# Visualization
bin_acc, conf_axis, ece_score = nc.reliability_diagram(test_loader=testloader, rd_criterion="ECE", n_bins=30)
plot_reliability_diagram(conf_axis, bin_acc)
```

Citations

If you find Neural Clamping helpful for your research, please cite our papers as follows:

```bibtex
@inproceedings{hsiung2023nctv,
  title={{NCTV: Neural Clamping Toolkit and Visualization for Neural Network Calibration}},
  author={Lei Hsiung and Yung-Chen Tang and Pin-Yu Chen and Tsung-Yi Ho},
  booktitle={Proceedings of the Thirty-Seventh AAAI Conference on Artificial Intelligence},
  publisher={Association for the Advancement of Artificial Intelligence},
  year={2023},
  month={February}
}

@misc{tang2022neural_clamping,
  title={{Neural Clamping: Joint Input Perturbation and Temperature Scaling for Neural Network Calibration}},
  author={Yung-Chen Tang and Pin-Yu Chen and Tsung-Yi Ho},
  year={2022},
  eprint={2209.11604},
  archivePrefix={arXiv},
  primaryClass={cs.LG}
}
```