News

- 05/2023 - Code and pre-trained models are released on our GitHub repo.

- 02/2023 - This project was accepted to CVPR 2023 (Vancouver, Canada). [Paper] [arXiv]

- 12/2022 - This project was presented at IBM Expo during NeurIPS 2022 (New Orleans, USA).

- 04/2022 - This project was accepted for presentation at the IJCAI 2022 Demo Track (Vienna, Austria). [Demo Paper]

Introduction

Adversarial attacks on neural networks (NNs) have been widely explored. However, previous studies have mostly crafted perturbations bounded under a distance metric: most such work has focused on \(\ell_{p}\)-norm perturbations (i.e., \(\ell_{1}\), \(\ell_{2}\), or \(\ell_{\infty}\)) and used gradient-based optimization to generate adversarial examples efficiently. Adversarial perturbations can, however, extend beyond \(\ell_{p}\)-norm bounds.
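For reference, the \(\ell_{p}\)-bounded threat model can be written in the usual notation (ours, not necessarily the paper's): for a classifier \(f\) with loss \(\mathcal{L}\), a clean input \(x\) with label \(y\), and a budget \(\epsilon\), the attacker searches for a perturbation \(\delta\) solving

\[
\max_{\delta:\ \|\delta\|_{p} \le \epsilon} \mathcal{L}\big(f(x + \delta),\, y\big),
\qquad
\|\delta\|_{p} = \Big(\sum_{i} |\delta_{i}|^{p}\Big)^{1/p},
\qquad
\|\delta\|_{\infty} = \max_{i} |\delta_{i}|.
\]

Gradient-based methods such as PGD approximately solve this problem by taking repeated gradient steps, each followed by a projection back onto the \(\epsilon\)-ball around \(x\).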

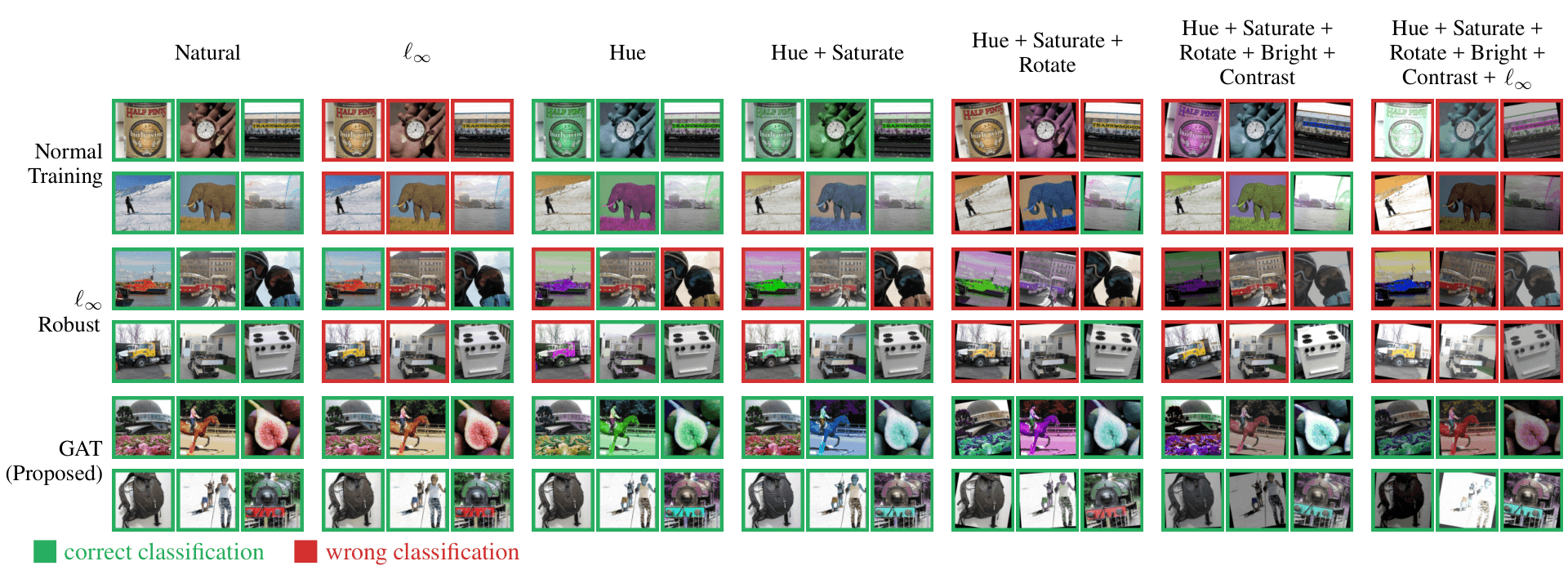

We combined the \(\ell_\infty\)-norm perturbation with semantic perturbations (i.e., hue, saturation, rotation, brightness, and contrast) and proposed a novel approach, the composite adversarial attack (CAA), capable of generating unified adversarial examples. CAA differs from previously proposed perturbations in two main ways: a) CAA incorporates several threat models simultaneously, and b) CAA's adversarial examples are semantically similar to the originals and/or natural-looking, yet differ greatly from them in \(\ell_{p}\)-norm measures.

Figure. The Composite Adversarial Attack (CAA) Framework.
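To illustrate why a natural-looking semantic perturbation can still be far away in \(\ell_{p}\) distance, the minimal sketch below brightens a toy image slightly and measures the \(\ell_{1}\), \(\ell_{2}\), and \(\ell_{\infty}\) distances. The `brighten` helper is a hypothetical uniform additive shift chosen for illustration, not the brightness perturbation used by CAA, and the image is a plain list of grayscale values in [0, 1].

```python
"""Illustrative sketch (not from composite-adv): a small, uniform brightness
shift looks natural but lies far outside a typical l_inf budget."""
import math
from typing import List, Tuple


def lp_distances(x: List[float], x_adv: List[float]) -> Tuple[float, float, float]:
    """Return the (l1, l2, l_inf) distances between two flattened images."""
    diffs = [abs(a - b) for a, b in zip(x, x_adv)]
    l1 = sum(diffs)
    l2 = math.sqrt(sum(d * d for d in diffs))
    linf = max(diffs)
    return l1, l2, linf


def brighten(x: List[float], delta: float) -> List[float]:
    """Hypothetical brightness perturbation: add delta to every pixel, clip to [0, 1]."""
    return [min(1.0, max(0.0, v + delta)) for v in x]


# A toy 4x4 grayscale "image" in [0, 1], flattened row by row.
image = [0.2, 0.3, 0.4, 0.5] * 4
brighter = brighten(image, 0.1)

l1, l2, linf = lp_distances(image, brighter)
eps = 8 / 255  # the l_inf budget used for CIFAR-10 on the leaderboard
print(f"l1={l1:.2f}, l2={l2:.2f}, linf={linf:.3f}, eps={eps:.3f}")
print("outside the l_inf ball:", linf > eps)
```

A brightness shift of 0.1 is barely noticeable to a human, yet its \(\ell_{\infty}\) distance is roughly three times the 8/255 budget, so no \(\ell_{\infty}\)-bounded attack at that budget could produce it.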


To further demonstrate the proposed idea, familiarize other researchers with the concept of composite adversarial robustness, and ultimately help create more trustworthy AI, we developed this browser-based demo for generating composite perturbations, together with an adversarial robustness leaderboard, as CARBEN (Composite Adversarial Robustness Benchmark). CARBEN also features interactive sections that let users configure the attack-level parameters and quickly evaluate the model's predictions.

Demonstration

I. Composite Perturbations with Custom Order

Try changing the attack order by dragging the perturbation blocks with your mouse, then click Generate to see the perturbed images. We provide several sample images together with the predictions of an \(\ell_{\infty}\)-robust model. Note that the image below each perturbation block includes that perturbation and all perturbations before it.

- Original

- Hue

- Saturation

- Rotation

- Brightness

- Contrast

- \(\ell_{\infty}\)

- Warplane (82%)

- Warplane (79%)

- Warplane (54%)

- Wing (40%)

- Wing (40%)

- Wing (56%)
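The custom-order idea above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not the Composite-Adv implementation: `hue`, `saturation`, `brightness`, and `contrast` are hypothetical per-pixel functions with fixed strengths of our choosing, rotation (a spatial transform) and the gradient-based \(\ell_\infty\) step are omitted, and an image is a plain list of RGB tuples in [0, 1]. Like the demo, `compose` returns the image after every stage, so each stage includes all perturbations before it.

```python
"""Sketch: composing semantic perturbations in a user-chosen order.
The perturbations are simplified, hypothetical stand-ins, not composite-adv code."""
import colorsys
from typing import Callable, Dict, List, Tuple

Pixel = Tuple[float, float, float]  # RGB, each channel in [0, 1]


def clip(v: float) -> float:
    return min(1.0, max(0.0, v))


def hue(p: Pixel, shift: float = 0.1) -> Pixel:
    # Rotate the hue channel in HSV space (hue wraps around at 1.0).
    h, s, v = colorsys.rgb_to_hsv(*p)
    return colorsys.hsv_to_rgb((h + shift) % 1.0, s, v)


def saturation(p: Pixel, factor: float = 1.5) -> Pixel:
    # Scale the saturation channel in HSV space, clipped to [0, 1].
    h, s, v = colorsys.rgb_to_hsv(*p)
    return colorsys.hsv_to_rgb(h, clip(s * factor), v)


def brightness(p: Pixel, delta: float = 0.2) -> Pixel:
    # Additive shift on every channel.
    return tuple(clip(c + delta) for c in p)


def contrast(p: Pixel, factor: float = 2.0) -> Pixel:
    # Scale each channel's distance from mid-gray (0.5).
    return tuple(clip(0.5 + factor * (c - 0.5)) for c in p)


PERTURBATIONS: Dict[str, Callable[[Pixel], Pixel]] = {
    "hue": hue,
    "saturation": saturation,
    "brightness": brightness,
    "contrast": contrast,
}


def compose(image: List[Pixel], order: List[str]) -> List[List[Pixel]]:
    """Apply perturbations top to bottom and return the image after each stage."""
    stages: List[List[Pixel]] = []
    current = image
    for name in order:
        current = [PERTURBATIONS[name](p) for p in current]
        stages.append(current)
    return stages


image = [(0.6, 0.4, 0.3), (0.2, 0.5, 0.7)]
a = compose(image, ["brightness", "contrast"])[-1]
b = compose(image, ["contrast", "brightness"])[-1]
print(a)
print(b)
print("order matters:", a != b)
```

Swapping brightness and contrast changes the output because an additive shift and a scaling about mid-gray do not commute (and clipping adds further nonlinearity), which is why the attack order is a meaningful parameter of a composite attack.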

II. Craft Your Desired Composite Perturbations

1. Select a model

- Trained and tested on ImageNet

- Architecture: ResNet-50

- Clean Accuracy: 76.13%

- Robust Accuracy (AutoAttack: \(\ell_\infty\), 4/255): 0.0%

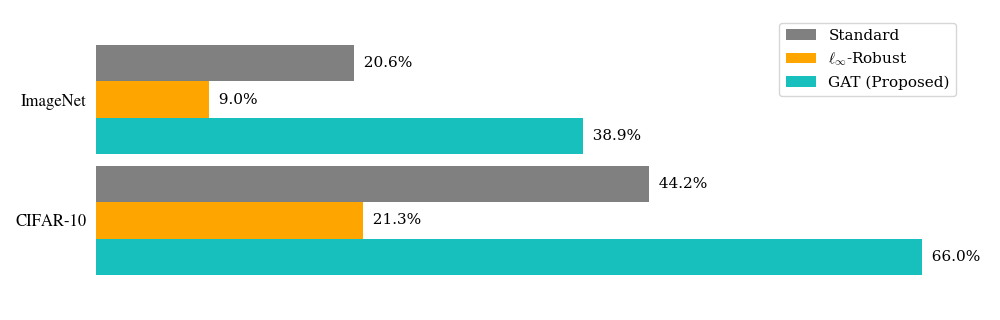

- Robust Accuracy (Composite Semantic Attack): 20.6%

2. Select an image

3. Specify Perturbation Level (Real-time Rendering)

Here we render the perturbed images using Composite-Adv (GitHub). We also support a custom attack order: the attack panels below are adjustable, so drag an attack to the desired position, and the perturbed image will be rendered by applying the attacks in order from top to bottom.

- Ground Truth:

- Model Prediction:

4. Robustness Statistics

Evaluate model robustness on the full test sets. The following chart shows the models' robust accuracy under semantic attacks (without \(\ell_\infty\)). Two datasets are currently supported: CIFAR-10 and ImageNet.
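Statistics like these can be computed from per-example predictions. The sketch below assumes common definitions: robust accuracy is the fraction of test examples still classified correctly after the attack, and attack success rate is the fraction of originally correct examples that the attack flips to a wrong label. Both definitions and the helper `robustness_metrics` are our assumptions for illustration; `composite_adv`'s `robustness_evaluate` may compute its outputs differently.

```python
"""Sketch of common robustness metrics (definitions are assumptions, not
necessarily those used by composite_adv.utilities.robustness_evaluate)."""
from typing import Sequence, Tuple


def robustness_metrics(labels: Sequence[int],
                       clean_preds: Sequence[int],
                       adv_preds: Sequence[int]) -> Tuple[float, float, float]:
    """Return (clean accuracy, robust accuracy, attack success rate).

    Attack success rate here = fraction of originally correct examples
    that become misclassified under attack.
    """
    n = len(labels)
    clean_correct = [y == p for y, p in zip(labels, clean_preds)]
    adv_correct = [y == p for y, p in zip(labels, adv_preds)]
    clean_acc = sum(clean_correct) / n
    robust_acc = sum(adv_correct) / n
    num_clean_correct = sum(clean_correct)
    flipped = sum(c and not a for c, a in zip(clean_correct, adv_correct))
    asr = flipped / num_clean_correct if num_clean_correct else 0.0
    return clean_acc, robust_acc, asr


labels = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
clean_preds = [0, 1, 2, 3, 4, 5, 6, 7, 0, 0]  # 8 of 10 correct
adv_preds = [0, 1, 9, 9, 4, 9, 6, 9, 0, 0]    # 4 of 10 correct
print(robustness_metrics(labels, clean_preds, adv_preds))  # (0.8, 0.4, 0.5)
```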

Use CAA to Evaluate Your Own Models

Quick start: run the following code.

```python
# !pip install git+https://github.com/IBM/composite-adv.git

from composite_adv.utilities import make_dataloader, make_model, robustness_evaluate
from composite_adv.attacks import CompositeAttack

# Load dataset
data_loader = make_dataloader('./data/', 'cifar10', batch_size=256)

# Load a model
model = make_model('resnet50', 'cifar10', checkpoint_path='PATH_OF_CHECKPOINT')

# Evaluate the Compositional Adversarial Robustness of the model
# Specify the Composite Attack parameters (e.g., Full Attacks)
# 0: Hue, 1: Saturation, 2: Rotation, 3: Brightness, 4: Contrast, 5: L-infinity (PGD)
composite_attack = CompositeAttack(model,
                                   enabled_attack=(0, 1, 2, 3, 4, 5),
                                   order_schedule="scheduled")

accuracy, attack_success_rate = robustness_evaluate(model, composite_attack, data_loader)
```

Benchmarks and Leaderboards

We track the models' adversarial robustness under \(\ell_\infty\) attacks (AutoAttack; Croce and Hein) and composite adversarial attacks (CAA; Hsiung et al.). Models are ranked by their robust accuracy (R.A.) under Full Attacks (all five semantic perturbations combined with \(\ell_\infty\)). The \(\ell_\infty\) budget \(\epsilon\) was set to 8/255 for CIFAR-10 and 4/255 for ImageNet.
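For the \(\ell_\infty\) component, a PGD-style attack keeps the perturbed image inside the \(\epsilon\)-ball around the clean image after every step. The minimal pure-Python sketch below shows one signed-gradient step followed by projection, using the leaderboard budgets. The helper names (`project_linf`, `pgd_step`, `EPSILON`) and the flat-list image representation are hypothetical; this is a generic illustration, not the AutoAttack or Composite-Adv code, and the gradient is supplied by hand rather than computed from a model's loss.

```python
"""Generic sketch of the l_inf constraint used by PGD-style attacks
(hypothetical helpers, not the AutoAttack or composite-adv implementation)."""
from typing import List

# l_inf budgets used on the leaderboard.
EPSILON = {"cifar10": 8 / 255, "imagenet": 4 / 255}


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def project_linf(x: List[float], x_adv: List[float], eps: float) -> List[float]:
    """Clip x_adv into the l_inf ball of radius eps around x, then into [0, 1]."""
    return [min(1.0, max(0.0, min(xi + eps, max(xi - eps, ai))))
            for xi, ai in zip(x, x_adv)]


def pgd_step(x: List[float], x_adv: List[float], grad: List[float],
             eps: float, step_size: float) -> List[float]:
    """One signed-gradient ascent step on the loss, followed by projection."""
    stepped = [ai + step_size * sign(g) for ai, g in zip(x_adv, grad)]
    return project_linf(x, stepped, eps)


x = [0.5, 0.0, 0.99]      # toy clean "image"
grad = [1.0, -2.0, 3.0]   # hand-picked loss gradient for illustration
eps = EPSILON["cifar10"]
adv = pgd_step(x, x, grad, eps, step_size=eps)
print(adv)  # first pixel moves up by eps; the others are clipped to [0, 1]
```

Projecting after every step (rather than only at the end) guarantees that each intermediate iterate is a valid \(\ell_\infty\)-bounded adversarial example.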

CIFAR-10

ImageNet

Want to Join the Leaderboard?

If you would like to submit your model, please follow the instructions in CARBEN-Leaderboard.ipynb to evaluate your model. After completing the robustness assessment, please fill in the Google Form, and we will update the leaderboard after confirmation.

Citations

If you find our work helpful or inspiring for your research, please cite our papers as follows:

```bibtex
@inproceedings{hsiung2022caa,
  title={{Towards Compositional Adversarial Robustness: Generalizing Adversarial Training to Composite Semantic Perturbations}},
  author={Lei Hsiung and Yun-Yun Tsai and Pin-Yu Chen and Tsung-Yi Ho},
  booktitle={{IEEE/CVF} Conference on Computer Vision and Pattern Recognition, {CVPR}},
  publisher={{IEEE}},
  year={2023},
  month={June}
}

@inproceedings{hsiung2022carben,
  title={{CARBEN: Composite Adversarial Robustness Benchmark}},
  author={Lei Hsiung and Yun-Yun Tsai and Pin-Yu Chen and Tsung-Yi Ho},
  booktitle={Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, {IJCAI-22}},
  publisher={International Joint Conferences on Artificial Intelligence Organization},
  year={2022},
  month={July}
}
```